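Lately many phone makers have been promoting the idea of the "AI phone". So how do you measure an AI phone, and whose is actually the strongest? As far as I can tell there is no unified yardstick yet. While looking into running large models on phones, I came across ncnn, a framework open-sourced by Tencent: a high-quality, open-source neural network inference framework built specifically for mobile platforms. Digging further, I found that ncnn ships with a benchmark tool, benchncnn. It is well suited to measuring phone performance, and its results can serve as a yardstick for the currently popular AI phone concept.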

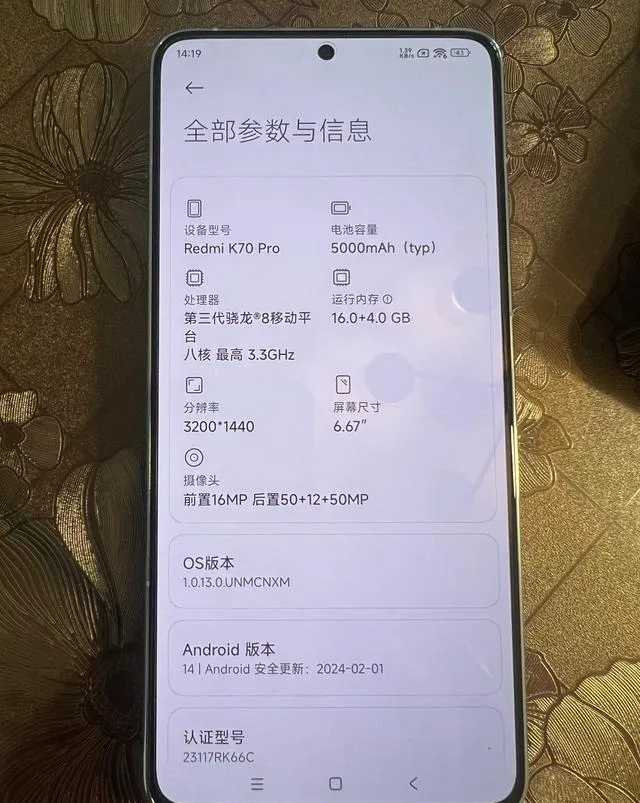

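So here I record how to run the ncnn benchmark, using the Snapdragon 8 Gen 3 as the test subject on a Redmi K70 Pro.

The detailed method and results follow.

First, a look (or a refresher) at Tencent's ncnn framework.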

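1. What is ncnn

ncnn is a high-performance neural network inference framework, open-sourced by Tencent's Youtu Lab and optimized specifically for mobile platforms. The project lives at github.com/Tencent/ncnn.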

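Here is Tencent's official introduction:

ncnn is a high-performance neural network inference computing framework optimized for mobile platforms. ncnn has been designed with deployment and use on mobile phones in mind from the very beginning. It has no third-party dependencies, it is cross-platform, and on mobile phone CPUs it runs faster than all known open-source frameworks. With ncnn, developers can easily port deep learning algorithms to phones and run them efficiently, build AI apps, and bring AI to your fingertips. ncnn is currently used in many Tencent applications, such as QQ, Qzone, WeChat, and Pitu.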

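ncnn is also strong on features. There are many of them, so here is a brief list:

Feature overview

Supports convolutional neural networks, multiple inputs, and multi-branch structures; can compute only part of the branches

No third-party library dependencies; does not rely on BLAS/NNPACK or other computing frameworks

Pure C++ implementation, cross-platform, supports Android, iOS, and others

Careful assembly-level ARM NEON optimization, very fast computation

Fine-grained memory management and data structure design, very low memory footprint

Supports multi-core parallel acceleration and ARM big.LITTLE CPU scheduling optimization

Supports GPU acceleration through the new low-overhead Vulkan API

Extensible model design; supports 8-bit quantization and half-precision floating-point storage; can import caffe/pytorch/mxnet/onnx/darknet/keras/tensorflow(mlir) models

Supports loading network models by direct zero-copy memory reference

Custom layer implementations can be registered and extended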

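Of course, this is a low-level framework meant to be used by apps, so ordinary users never notice it is there. For developers, though, ncnn makes it easy to build apps with AI features. Below are some projects built on ncnn; have a look to see whether any of them are useful to you.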

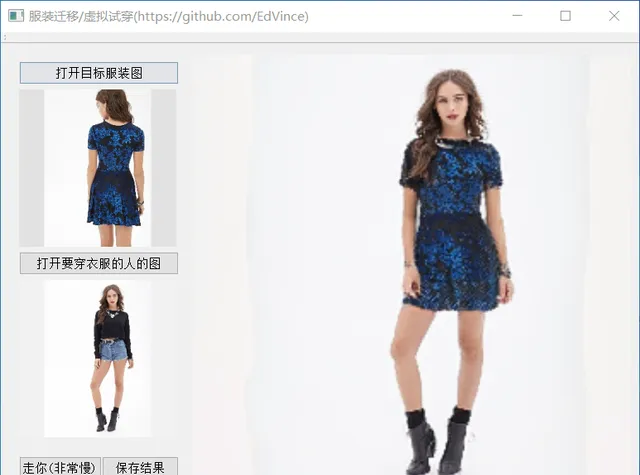

GitHub - EdVince/ClothingTransfer-NCNN: CT-Net, OpenPose, LIP_JPPNet, DensePose running with ncnn ⚡ClothingTransfer/Virtual-Try-On⚡: a clothing-transfer and virtual try-on project built on ncnn that lets a model try on different outfits.

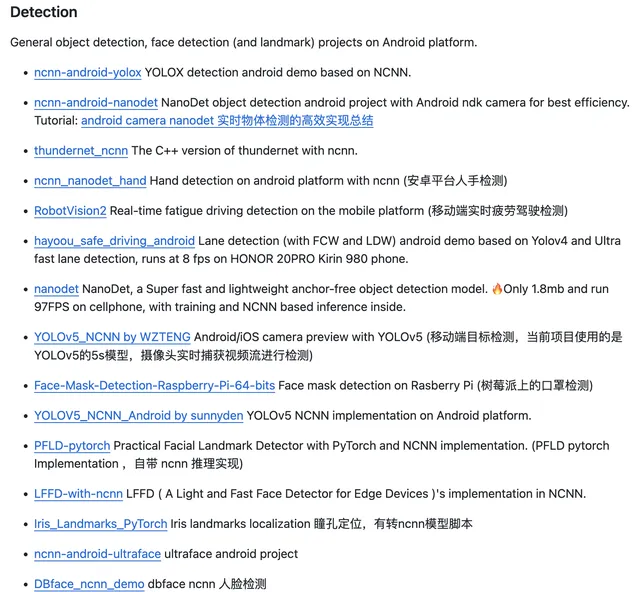

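Object detection:

There are a great many of these apps. The basic architecture is yolox/opencv + ncnn: YOLO is the detection algorithm, and ncnn does the inference when running on an Android phone. There are a lot of projects in this category; a screenshot follows.

You can see object detection, real-time object detection, face detection, hand detection, real-time drowsy-driving detection, mask detection, and even pupil localization. If you are interested, see https://github.com/zchrissirhcz/awesome-ncnn for more.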

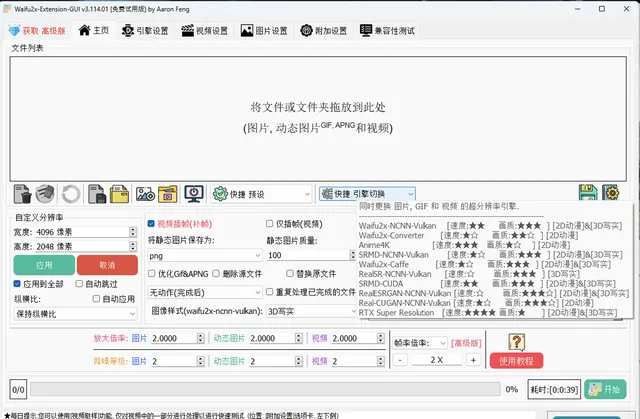

超级分辨率类:

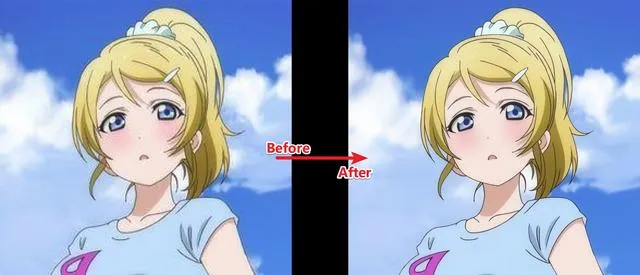

借助于ncnn框架实现对图片/视频的分辨率提升,

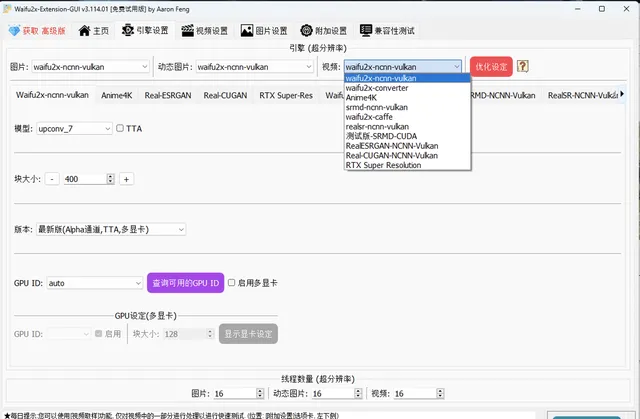

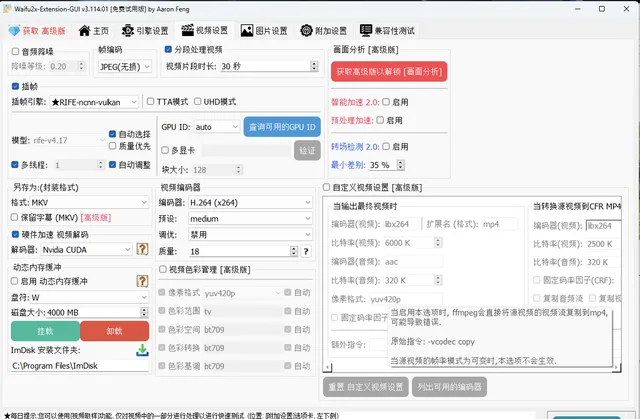

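The one I want to highlight is Waifu2x-Extension-GUI. It upscales video, images, and GIFs (super-resolution) and performs video frame interpolation, using Waifu2x, Real-ESRGAN, Real-CUGAN, RTX Video Super Resolution (VSR), SRMD, RealSR, Anime4K, RIFE, IFRNet, CAIN, DAIN, and ACNet.

The interface is plain, but the feature set is strong. From the main window it can upscale still images, upscale GIFs, and upscale video; in my example a 360P source video was upscaled to 1440P.

Interested in trying it? It is Chinese-made software and well worth recommending.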

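2. Why use ncnn's benchmark, benchncnn, to measure AI phones?

The examples above were meant to show how capable and how widely used ncnn is. As the first neural network inference framework open-sourced in China, back in 2017, it really is used by many projects. So why pick ncnn specifically as the standard for testing AI phones? The main reason is the nature of the framework itself.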

Look again at the feature overview from Tencent's documentation, quoted in section 1. It shows that ncnn has no third-party dependencies at all, which keeps it very clean, and that its memory management and ARM NEON code paths are heavily optimized. Developers outside China have been envious of this too.

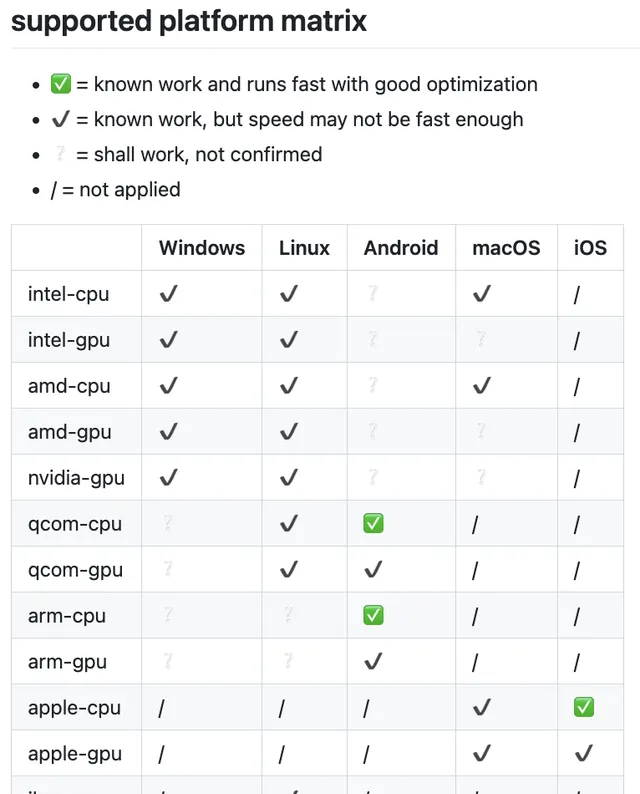

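Another point is that ncnn's compatibility is excellent: it supports nearly every platform.

The detailed support list even includes Huawei's HarmonyOS. Impressive!

Given all this, choosing ncnn to gauge how strong an AI phone is makes sense. In terms of acceptance and breadth of use, I see only onnx and ncnn as candidates right now, so ncnn it is (hats off to nihui).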

3. benchncnn usage

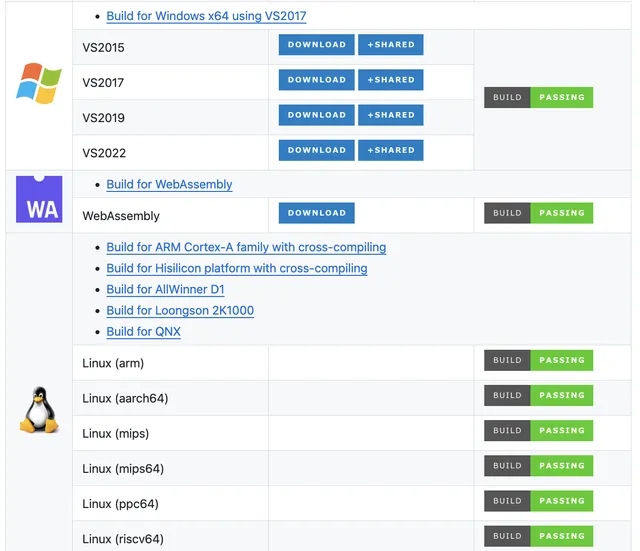

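As for building ncnn locally, I compiled it on Android itself, inside the Linux environment that Termux provides. For the exact steps, see my earlier post "Learning a bit of AI: compiling and running Tencent's NCNN library on an Android phone".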

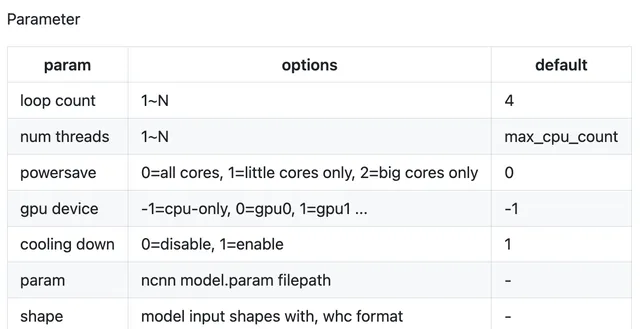

Once the build finishes, the benchncnn tool is available. Its usage is:

root@localhost:~/ncnn/benchmark# ../build/benchmark/benchncnn --help

Usage: benchncnn [loop count] [num threads] [powersave] [gpu device] [cooling down] [(key=value)...]

param=model.param

shape=[227,227,3],...
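To make the positional order in the usage line concrete, here is a minimal Python sketch that assembles a benchncnn command line from named values. The helper name `build_benchncnn_argv` and the default binary path are illustrative assumptions, not part of ncnn; only the argument order is taken from the usage string above.

```python
from typing import List


def build_benchncnn_argv(
    loop_count: int,
    num_threads: int,
    powersave: int,
    gpu_device: int,
    cooling_down: int,
    binary: str = "../build/benchmark/benchncnn",  # assumed path, as used in this post
) -> List[str]:
    """Return argv in the positional order printed by `benchncnn --help`."""
    return [
        binary,
        str(loop_count),
        str(num_threads),
        str(powersave),
        str(gpu_device),    # this post passes -1 for its CPU-only runs
        str(cooling_down),
    ]


if __name__ == "__main__":
    # 8 loops, 4 threads, powersave 0, CPU only, cooling down set to 1
    print(" ".join(build_benchncnn_argv(8, 4, 0, -1, 1)))
```

On a device where the binary exists, the returned list could be passed to `subprocess.run`; the sketch only builds the arguments so that it stays runnable anywhere.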

Since the goal is to test the Snapdragon 8 Gen 3, let's first go over the basics of this chip.

4. Snapdragon 8 Gen 3 basics

Basic CPU information:

CPU Qualcomm® Kryo™ CPU

64-bit Architecture

1 Prime core, up to 3.4 GHz**

Arm Cortex-X4 technology

5 Performance cores, up to 3.2 GHz*

2 Efficiency cores, up to 2.3 GHz*

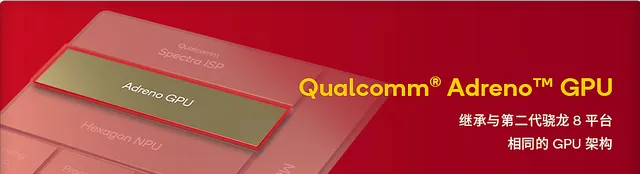

AI Engine information from Qualcomm's website (GPU, CPU, and NPU):

Qualcomm® AI Engine

Qualcomm® Adreno™ GPU

Qualcomm® Kryo™ CPU

Qualcomm® Hexagon™ NPU:

- Fused AI accelerator architecture

- Hexagon scalar, vector, and tensor accelerators

- Hexagon Direct Link

- Upgraded Micro Tile Inferencing

- Upgraded power delivery system

- Support for mix precision (INT8+INT16)

- Support for all precisions (INT4, INT8, INT16, FP16)

GPU information, queried over adb:

adb shell dumpsys SurfaceFlinger | grep GLES

Result:

------------RE GLES------------

GLES: Qualcomm, Adreno (TM) 750, OpenGL ES 3.2 [email protected] (GIT@62c1f322ce, Id0077aad60, 1700555917) (Date:11/21/23)

5. Running benchncnn in CPU mode

Here is an example with 4 threads and 8 loops on the CPU (the fourth argument, gpu device, is -1):

root@localhost:~/ncnn/benchmark# ../build/benchmark/benchncnn 8 4 0 -1 1

loop_count = 8

num_threads = 4

powersave = 0

gpu_device = -1

cooling_down = 1

squeezenet min = 2.36 max = 2.50 avg = 2.41

squeezenet_int8 min = 1.96 max = 2.06 avg = 2.00

mobilenet min = 3.95 max = 8.71 avg = 4.64

mobilenet_int8 min = 2.23 max = 2.36 avg = 2.30

mobilenet_v2 min = 2.80 max = 2.98 avg = 2.89

mobilenet_v3 min = 2.63 max = 2.80 avg = 2.72

shufflenet min = 2.00 max = 2.20 avg = 2.10

shufflenet_v2 min = 1.81 max = 1.98 avg = 1.87

mnasnet min = 2.87 max = 3.04 avg = 2.94

proxylessnasnet min = 3.23 max = 3.42 avg = 3.31

efficientnet_b0 min = 5.33 max = 5.63 avg = 5.44

efficientnetv2_b0 min = 6.27 max = 6.65 avg = 6.42

regnety_400m min = 5.70 max = 5.83 avg = 5.79

blazeface min = 0.71 max = 1.52 avg = 1.09

googlenet min = 9.06 max = 9.45 avg = 9.19

googlenet_int8 min = 7.16 max = 7.39 avg = 7.27

resnet18 min = 6.64 max = 6.84 avg = 6.74

resnet18_int8 min = 4.89 max = 5.07 avg = 4.95

alexnet min = 7.51 max = 7.60 avg = 7.56

vgg16 min = 36.01 max = 36.30 avg = 36.13

vgg16_int8 min = 33.57 max = 34.12 avg = 33.81

resnet50 min = 18.57 max = 18.96 avg = 18.75

resnet50_int8 min = 10.55 max = 10.81 avg = 10.64

squeezenet_ssd min = 7.28 max = 7.68 avg = 7.47

squeezenet_ssd_int8 min = 6.14 max = 6.82 avg = 6.43

mobilenet_ssd min = 8.54 max = 9.06 avg = 8.66

mobilenet_ssd_int8 min = 4.93 max = 5.14 avg = 5.02

mobilenet_yolo min = 20.36 max = 22.02 avg = 20.80

mobilenetv2_yolov3 min = 11.46 max = 11.61 avg = 11.53

yolov4-tiny min = 15.20 max = 15.44 avg = 15.33

nanodet_m min = 5.14 max = 5.57 avg = 5.28

yolo-fastest-1.1 min = 2.40 max = 2.77 avg = 2.52

yolo-fastestv2 min = 2.00 max = 2.28 avg = 2.09

vision_transformer min = 243.12 max = 248.61 avg = 244.42

FastestDet min = 1.88 max = 2.12 avg = 1.97
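Comparing runs by eye gets tedious with this many models, so it can help to turn the output into data. The following is a minimal sketch, assuming each result has the form `name min = X max = Y avg = Z` as in the output above; `parse_benchncnn` and `Timing` are names made up for this example, not part of ncnn.

```python
import re
from typing import Dict, NamedTuple


class Timing(NamedTuple):
    min: float
    max: float
    avg: float


# One result looks like: "squeezenet min = 2.36 max = 2.50 avg = 2.41"
_RESULT = re.compile(
    r"([A-Za-z][\w.\-]*)\s+min\s*=\s*([\d.]+)\s+max\s*=\s*([\d.]+)\s+avg\s*=\s*([\d.]+)"
)


def parse_benchncnn(text: str) -> Dict[str, Timing]:
    """Map each model name to its (min, max, avg) timings."""
    return {
        m.group(1): Timing(float(m.group(2)), float(m.group(3)), float(m.group(4)))
        for m in _RESULT.finditer(text)
    }


if __name__ == "__main__":
    sample = """\
loop_count = 8
num_threads = 4
squeezenet min = 2.36 max = 2.50 avg = 2.41
yolo-fastest-1.1 min = 2.40 max = 2.77 avg = 2.52
vision_transformer min = 243.12 max = 248.61 avg = 244.42
"""
    for name, timing in parse_benchncnn(sample).items():
        print(f"{name}: avg {timing.avg}")
```

Because it scans with a regular expression instead of splitting on newlines, the sketch also copes with output whose line breaks were lost when it was copied. Header lines such as `loop_count = 8` are skipped because they contain no `min =` field.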

Since the CPU has 8 cores, let's also run it with 8 threads:

root@localhost:~/ncnn/benchmark# ../build/benchmark/benchncnn 8 8 0 -1 1

loop_count = 8

num_threads = 8

powersave = 0

gpu_device = -1

cooling_down = 1

squeezenet min = 7.35 max = 8.88 avg = 7.65

squeezenet_int8 min = 4.28 max = 4.41 avg = 4.32

mobilenet min = 6.11 max = 7.43 avg = 6.43

mobilenet_int8 min = 4.55 max = 4.75 avg = 4.61

mobilenet_v2 min = 5.83 max = 6.54 avg = 6.01

mobilenet_v3 min = 4.64 max = 4.96 avg = 4.76

shufflenet min = 5.65 max = 7.19 avg = 6.00

shufflenet_v2 min = 7.20 max = 9.83 avg = 7.83

mnasnet min = 4.94 max = 6.56 avg = 5.33

proxylessnasnet min = 6.46 max = 9.66 avg = 7.88

efficientnet_b0 min = 9.32 max = 10.82 avg = 9.68

efficientnetv2_b0 min = 11.19 max = 14.87 avg = 12.11

regnety_400m min = 11.15 max = 11.73 avg = 11.31

blazeface min = 2.12 max = 2.65 avg = 2.31

googlenet min = 17.73 max = 21.76 avg = 18.90

googlenet_int8 min = 12.51 max = 13.63 avg = 12.78

resnet18 min = 9.45 max = 28.39 avg = 12.39

resnet18_int8 min = 9.21 max = 9.61 avg = 9.41

alexnet min = 10.60 max = 12.25 avg = 11.10

vgg16 min = 53.30 max = 88.63 avg = 59.98

vgg16_int8 min = 59.42 max = 95.09 avg = 66.68

resnet50 min = 26.97 max = 29.22 avg = 27.72

resnet50_int8 min = 18.51 max = 25.78 avg = 20.98

squeezenet_ssd min = 12.53 max = 13.09 avg = 12.75

squeezenet_ssd_int8 min = 13.05 max = 15.11 avg = 13.47

mobilenet_ssd min = 14.60 max = 26.02 avg = 16.76

mobilenet_ssd_int8 min = 8.19 max = 8.52 avg = 8.31

mobilenet_yolo min = 36.56 max = 72.06 avg = 43.64

mobilenetv2_yolov3 min = 16.56 max = 29.45 avg = 19.33

yolov4-tiny min = 20.13 max = 23.69 avg = 22.23

nanodet_m min = 10.30 max = 13.62 avg = 11.38

yolo-fastest-1.1 min = 4.22 max = 5.33 avg = 4.43

yolo-fastestv2 min = 4.71 max = 6.93 avg = 5.28

vision_transformer min = 339.24 max = 460.96 avg = 393.47

FastestDet min = 4.53 max = 6.98 avg = 5.29
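More threads did not mean faster here. As a rough illustration, the sketch below takes three avg values from each of the two CPU runs above and computes how much longer the 8-thread run took. The selection of models and the helper name `slowdown` are choices made for this example only.

```python
# avg times in ms copied from the two CPU runs above: (4 threads, 8 threads)
avg_ms = {
    "squeezenet": (2.41, 7.65),
    "resnet50": (18.75, 27.72),
    "vision_transformer": (244.42, 393.47),
}


def slowdown(t4: float, t8: float) -> float:
    """How many times longer the 8-thread run took than the 4-thread run."""
    return t8 / t4


if __name__ == "__main__":
    for model, (t4, t8) in avg_ms.items():
        print(f"{model}: 8 threads took {slowdown(t4, t8):.2f}x the 4-thread time")
```

For all three sampled models the ratio is above 1, meaning the 8-thread run was slower. The 8-thread run also shows a much wider spread between min and max, so a higher loop count would give steadier numbers.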

6. Running benchncnn in GPU mode

For the GPU, ncnn uses the Vulkan API. To check whether Vulkan is configured correctly, first run the basic vulkaninfo command:

root@localhost:~/ncnn/benchmark# vulkaninfo --summary

WARNING: [Loader Message] Code 0 : terminator_CreateInstance: Received return code -3 from call to vkCreateInstance in ICD /usr/lib/aarch64-linux-gnu/libvulkan_virtio.so. Skipping this driver.

'DISPLAY' environment variable not set... skipping surface info

error: XDG_RUNTIME_DIR is invalid or not set in the environment.

error: XDG_RUNTIME_DIR is invalid or not set in the environment.

error: XDG_RUNTIME_DIR is invalid or not set in the environment.

error: XDG_RUNTIME_DIR is invalid or not set in the environment.

error: XDG_RUNTIME_DIR is invalid or not set in the environment.

error: XDG_RUNTIME_DIR is invalid or not set in the environment.

error: XDG_RUNTIME_DIR is invalid or not set in the environment.

==========

VULKANINFO

==========

Vulkan Instance Version: 1.3.275

Instance Extensions: count = 23

-------------------------------

VK_EXT_acquire_drm_display : extension revision 1

VK_EXT_acquire_xlib_display : extension revision 1

VK_EXT_debug_report : extension revision 10

VK_EXT_debug_utils : extension revision 2

VK_EXT_direct_mode_display : extension revision 1

VK_EXT_display_surface_counter : extension revision 1

VK_EXT_surface_maintenance1 : extension revision 1

VK_EXT_swapchain_colorspace : extension revision 4

VK_KHR_device_group_creation : extension revision 1

VK_KHR_display : extension revision 23

VK_KHR_external_fence_capabilities : extension revision 1

VK_KHR_external_memory_capabilities : extension revision 1

VK_KHR_external_semaphore_capabilities : extension revision 1

VK_KHR_get_display_properties2 : extension revision 1

VK_KHR_get_physical_device_properties2 : extension revision 2

VK_KHR_get_surface_capabilities2 : extension revision 1

VK_KHR_portability_enumeration : extension revision 1

VK_KHR_surface : extension revision 25

VK_KHR_surface_protected_capabilities : extension revision 1

VK_KHR_wayland_surface : extension revision 6

VK_KHR_xcb_surface : extension revision 6

VK_KHR_xlib_surface : extension revision 6

VK_LUNARG_direct_driver_loading : extension revision 1

Instance Layers: count = 2

--------------------------

VK_LAYER_MESA_device_select Linux device selection layer 1.3.211 version 1

VK_LAYER_MESA_overlay Mesa Overlay layer 1.3.211 version 1

Devices:

========

GPU0:

apiVersion = 1.3.274

driverVersion = 0.0.1

vendorID = 0x10005

deviceID = 0x0000

deviceType = PHYSICAL_DEVICE_TYPE_CPU

deviceName = llvmpipe (LLVM 17.0.6, 128 bits)

driverID = DRIVER_ID_MESA_LLVMPIPE

driverName = llvmpipe

driverInfo = Mesa 24.0.5-1ubuntu1 (LLVM 17.0.6)

conformanceVersion = 1.3.1.1

deviceUUID = 6d657361-3234-2e30-2e35-xxxxxxxxxxxx

driverUUID = 6c6c766d-7069-7065-5555-xxxxxxxxxxxx

root@localhost:~/ncnn/benchmark#
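The part of this summary that matters most for a GPU benchmark is the `Devices` section: here the only entry, GPU0, reports `deviceType = PHYSICAL_DEVICE_TYPE_CPU` and `deviceName = llvmpipe`, which is Mesa's software implementation rather than the Adreno 750. A small check like the one below can flag that automatically. It is a sketch that assumes the `key = value` layout shown above; `parse_devices` and `is_software_device` are hypothetical helper names.

```python
from typing import Dict, List


def parse_devices(summary: str) -> List[Dict[str, str]]:
    """Collect the key = value fields listed under each GPUn: heading."""
    devices: List[Dict[str, str]] = []
    for raw in summary.splitlines():
        line = raw.strip()
        if line.startswith("GPU") and line.endswith(":"):
            devices.append({})              # start a new device record
        elif devices and "=" in line:
            key, _, value = line.partition("=")
            devices[-1][key.strip()] = value.strip()
    return devices


def is_software_device(device: Dict[str, str]) -> bool:
    """True when Vulkan reports the device as CPU-based."""
    return device.get("deviceType") == "PHYSICAL_DEVICE_TYPE_CPU"


if __name__ == "__main__":
    sample = """\
Devices:
========
GPU0:
    apiVersion = 1.3.274
    deviceType = PHYSICAL_DEVICE_TYPE_CPU
    deviceName = llvmpipe (LLVM 17.0.6, 128 bits)
"""
    for dev in parse_devices(sample):
        kind = "software (CPU)" if is_software_device(dev) else "hardware"
        print(f"{dev.get('deviceName')}: {kind}")
```

If every listed device turns out to be a software device, numbers from a "GPU mode" run in that environment are better read as another CPU measurement.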

The results in section 5 were CPU inference. Now let's see how benchncnn does in GPU mode (the fourth argument, gpu device, is 0, meaning the first GPU):

root@localhost:~/ncnn/benchmark# ../build/benchmark/benchncnn 8 4 0 0 1

loop_count = 8

num_threads = 4

powersave = 0

gpu_device = 0

cooling_down = 1

squeezenet min = 2.73 max = 2.83 avg = 2.79

squeezenet_int8 min = 2.27 max = 2.37 avg = 2.32

mobilenet min = 4.56 max = 4.64 avg = 4.60

mobilenet_int8 min = 2.61 max = 2.77 avg = 2.67

mobilenet_v2 min = 3.26 max = 3.49 avg = 3.38

mobilenet_v3 min = 3.02 max = 3.27 avg = 3.14

shufflenet min = 2.31 max = 2.61 avg = 2.46

shufflenet_v2 min = 2.11 max = 2.29 avg = 2.20

mnasnet min = 3.37 max = 3.51 avg = 3.43

proxylessnasnet min = 3.73 max = 4.07 avg = 3.88

efficientnet_b0 min = 6.17 max = 6.56 avg = 6.30

efficientnetv2_b0 min = 7.21 max = 7.49 avg = 7.35

regnety_400m min = 6.71 max = 6.97 avg = 6.80

blazeface min = 0.82 max = 0.95 avg = 0.86

googlenet min = 10.44 max = 10.74 avg = 10.55

googlenet_int8 min = 8.25 max = 8.41 avg = 8.32

resnet18 min = 7.47 max = 7.69 avg = 7.54

resnet18_int8 min = 5.55 max = 5.78 avg = 5.63

alexnet min = 8.20 max = 8.41 avg = 8.27

vgg16 min = 40.02 max = 40.73 avg = 40.42

vgg16_int8 min = 37.65 max = 42.27 avg = 39.12

resnet50 min = 21.32 max = 21.54 avg = 21.43

resnet50_int8 min = 12.07 max = 12.38 avg = 12.22

squeezenet_ssd min = 8.20 max = 8.63 avg = 8.41

squeezenet_ssd_int8 min = 6.88 max = 7.73 avg = 7.27

mobilenet_ssd min = 9.83 max = 10.27 avg = 9.93

mobilenet_ssd_int8 min = 5.69 max = 5.91 avg = 5.75

mobilenet_yolo min = 23.73 max = 24.30 avg = 24.01

mobilenetv2_yolov3 min = 13.11 max = 13.43 avg = 13.24

yolov4-tiny min = 17.56 max = 19.04 avg = 17.80

nanodet_m min = 6.12 max = 6.57 avg = 6.28

yolo-fastest-1.1 min = 2.76 max = 3.08 avg = 2.86

yolo-fastestv2 min = 2.28 max = 2.44 avg = 2.36

vision_transformer min = 284.04 max = 290.75 avg = 287.25

FastestDet min = 2.21 max = 2.49 avg = 2.31

Now an 8-thread GPU run (note that the fourth argument in this command is 1, not 0):

root@localhost:~/ncnn/benchmark# ../build/benchmark/benchncnn 8 8 0 1 1

loop_count = 8

num_threads = 8

powersave = 0

gpu_device = 1

cooling_down = 1

squeezenet min = 4.19 max = 12.36 avg = 7.60

squeezenet_int8 min = 5.13 max = 6.05 avg = 5.59

mobilenet min = 6.64 max = 6.78 avg = 6.70

mobilenet_int8 min = 4.24 max = 7.84 avg = 5.12

mobilenet_v2 min = 14.45 max = 17.51 avg = 15.52

mobilenet_v3 min = 4.72 max = 4.97 avg = 4.84

shufflenet min = 4.27 max = 6.32 avg = 4.75

shufflenet_v2 min = 5.09 max = 5.29 avg = 5.16

mnasnet min = 4.74 max = 5.07 avg = 4.91

proxylessnasnet min = 6.64 max = 7.04 avg = 6.77

efficientnet_b0 min = 10.49 max = 12.27 avg = 11.07

efficientnetv2_b0 min = 21.73 max = 23.92 avg = 22.20

regnety_400m min = 15.11 max = 16.45 avg = 15.46

blazeface min = 2.04 max = 3.32 avg = 2.30

googlenet min = 15.56 max = 16.37 avg = 15.82

googlenet_int8 min = 14.33 max = 15.88 avg = 14.93

resnet18 min = 10.93 max = 11.40 avg = 11.15

resnet18_int8 min = 9.07 max = 9.56 avg = 9.30

alexnet min = 11.36 max = 11.91 avg = 11.54

vgg16 min = 65.51 max = 82.50 avg = 69.47

vgg16_int8 min = 56.88 max = 61.46 avg = 58.33

resnet50 min = 27.11 max = 34.45 avg = 30.53

resnet50_int8 min = 18.92 max = 20.28 avg = 19.16

squeezenet_ssd min = 12.00 max = 16.68 avg = 13.26

squeezenet_ssd_int8 min = 13.03 max = 16.40 avg = 14.04

mobilenet_ssd min = 13.31 max = 15.48 avg = 14.03

mobilenet_ssd_int8 min = 9.52 max = 12.70 avg = 10.24

mobilenet_yolo min = 45.97 max = 105.43 avg = 76.82

mobilenetv2_yolov3 min = 18.20 max = 21.84 avg = 20.05

yolov4-tiny min = 24.67 max = 33.68 avg = 29.67

nanodet_m min = 13.36 max = 14.26 avg = 13.87

yolo-fastest-1.1 min = 6.96 max = 7.62 avg = 7.17

yolo-fastestv2 min = 4.16 max = 7.57 avg = 4.66

vision_transformer min = 380.71 max = 469.66 avg = 419.79

FastestDet min = 4.61 max = 4.87 avg = 4.69

root@localhost:~/ncnn/benchmark#


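7. Conclusion

This post has run long, so here is just a brief conclusion. The next post will compare different chips against each other.

CPU mode outperforms GPU mode

This was a bit of a surprise, but look at the result of the first CPU-mode run: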

root@localhost:~/ncnn/benchmark# ../build/benchmark/benchncnn 8 4 0 -1 1

loop_count = 8

num_threads = 4

powersave = 0

gpu_device = -1

cooling_down = 1

squeezenet min = 2.36 max = 2.50 avg = 2.41

And here is the result in GPU mode:

root@localhost:~/ncnn/benchmark# ../build/benchmark/benchncnn 8 4 0 0 1

loop_count = 8

num_threads = 4

powersave = 0

gpu_device = 0

cooling_down = 1

squeezenet min = 2.73 max = 2.83 avg = 2.79

In CPU mode squeezenet averages 2.41 ms per inference, while in GPU mode it averages 2.79 ms. Lower is better, so the CPU wins ✌️.
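To put a number on the gap, the sketch below computes the percentage difference between the two 4-thread runs for three models, once using min and once using avg. The values are copied from the outputs in sections 5 and 6; the function name `percent_change` and the choice of models are assumptions made for this illustration.

```python
# (min, avg) in ms from the 4-thread runs: CPU mode (gpu_device = -1)
# versus GPU mode (gpu_device = 0)
runs = {
    "squeezenet": {"cpu": (2.36, 2.41), "gpu": (2.73, 2.79)},
    "mobilenet": {"cpu": (3.95, 4.64), "gpu": (4.56, 4.60)},
    "blazeface": {"cpu": (0.71, 1.09), "gpu": (0.82, 0.86)},
}


def percent_change(cpu_ms: float, gpu_ms: float) -> float:
    """Positive means GPU mode took longer than CPU mode."""
    return (gpu_ms - cpu_ms) / cpu_ms * 100.0


if __name__ == "__main__":
    for model, r in runs.items():
        by_min = percent_change(r["cpu"][0], r["gpu"][0])
        by_avg = percent_change(r["cpu"][1], r["gpu"][1])
        print(f"{model}: min {by_min:+.1f}%, avg {by_avg:+.1f}%")
```

Looking at both columns is deliberate. With only 8 loops, one slow iteration can shift avg noticeably, so min is often the steadier figure to compare.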

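Most of the other models show the same pattern. By avg there are two exceptions, mobilenet (4.64 vs 4.60) and blazeface (1.09 vs 0.86), but in both cases a single slow CPU iteration (max = 8.71 and max = 1.52) pulls the CPU average up, and the CPU still has the lower min. The result is understandable: the Snapdragon 8 Gen 3 has an 8-core CPU but only one GPU, and ncnn is not specifically optimized for this Qualcomm GPU. It is also worth keeping in mind that the vulkaninfo summary in section 6 lists only llvmpipe with deviceType PHYSICAL_DEVICE_TYPE_CPU, which is a software Vulkan implementation, so the GPU-mode numbers here may not reflect the Adreno 750 itself.

This post only compares the Snapdragon 8 Gen 3 against itself, CPU mode versus GPU mode. A follow-up will compare it with other processors to see how it stacks up.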